Last updated: January 3, 2026


Source linked in Google's AI Overview (Blog Management on Trustpilot): https://ie.trustpilot.com/review/blogmanagement.io

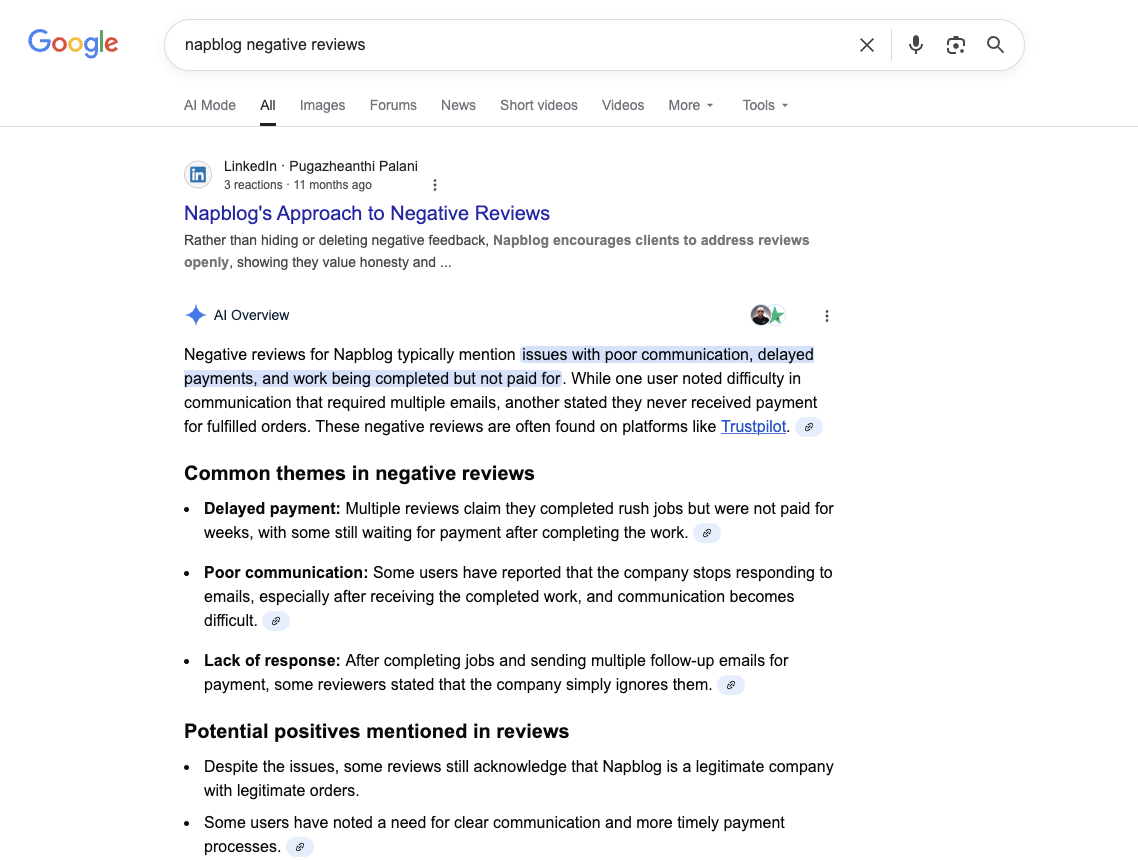

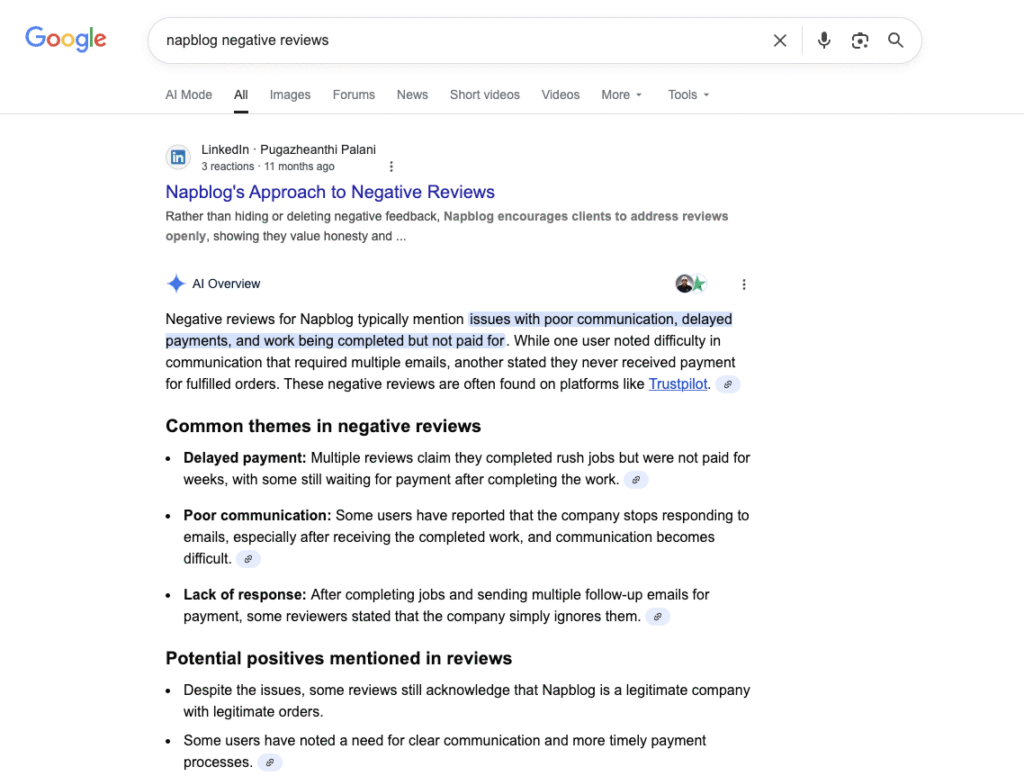

1. When AI Decided to Tell Our Story — Wrongly.

We woke up one morning to find out that Google’s shiny new AI Overview had decided to summarize our reputation for the world.

Except… it wasn’t our reputation.

It was someone else’s.

The AI confidently declared:

“Negative reviews for Napblog typically mention delayed payments and poor communication.”

See the screenshot below — a slick AI-generated paragraph that looks trustworthy, informative, and objective.

Except it’s none of those things.

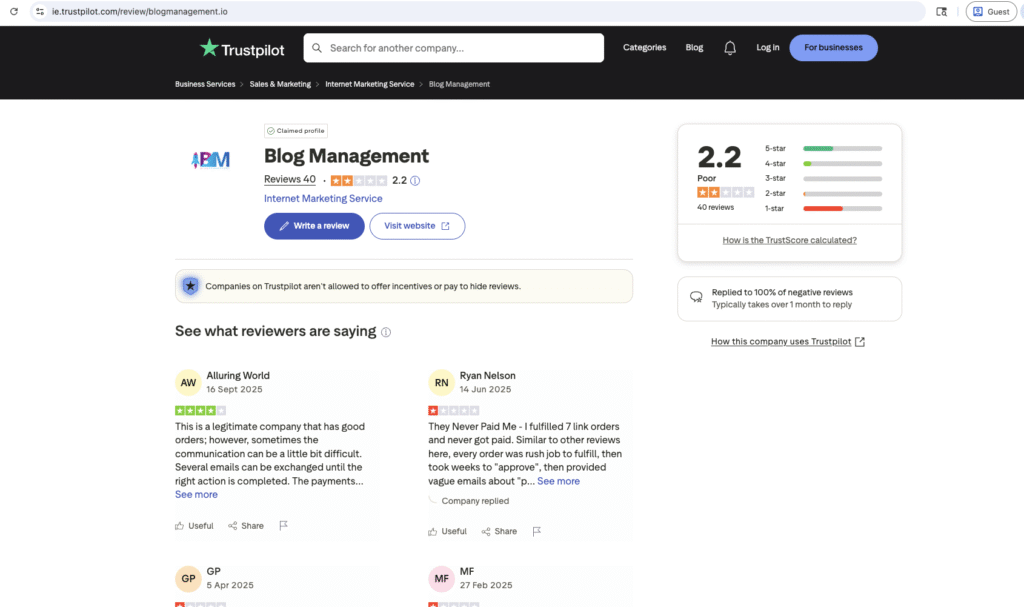

Because those “reviews” don’t belong to Napblog at all.

They belong to an entirely different company listed on Trustpilot — Blog Management.

Yet, there it was — on Google — as if Napblog were being called out for mistreating people we’ve never even worked with.

2. Let’s Be Honest — We’re Angry.

We’re not writing this out of PR politeness.

We’re frustrated. Deeply.

Because this isn’t a small typo or a funny AI misunderstanding.

It’s reputation damage, fabricated by an automated system that doesn’t understand the difference between Napblog and Blog Management.

We’re a small but dedicated digital marketing company built on trust, ethical work, and transparency.

We pay our people.

We communicate openly.

We help clients grow — without selling their soul to shortcuts.

And yet, an AI system that doesn’t know us, doesn’t work with us, doesn’t even fact-check — can casually misrepresent our name to millions.

How are we supposed to trust that kind of intelligence?

3. The Irony: A Company Built on Truth, Attacked by Misinformation.

At Napblog, our whole ethos is simple:

“We don’t believe in noise. We believe in clarity, honesty, and meaningful communication.”

That’s literally what we teach — to clients, coworkers, and the next generation of digital marketers in our coworking program.

And now, we’re being forced to correct a lie told by a machine that scraped the internet, mixed up two entities, and packaged it as “truth.”

This isn’t about vanity. It’s about trust.

We’ve always believed that transparency builds brands.

But what happens when the internet’s biggest AI starts confusing transparency with automation?

4. A Screenshot Worth a Thousand Facepalms

(See attached screenshot below)

This is what misinformation looks like in 2025:

An AI Overview that says negative reviews of Napblog mention "poor communication," followed by a "source" that leads to an unrelated company's Trustpilot page.

To the average user, it looks legitimate — because it’s coming from Google, the internet’s most trusted search brand.

But to us, it’s like watching someone else’s mistakes being stapled to our name in public.

No journalist wrote it.

No editor fact-checked it.

No human took responsibility.

Yet we’re the ones cleaning up the mess.

5. The Real-World Impact of AI Laziness

Let’s not sugarcoat it.

This isn’t just an “AI learning hiccup.”

This is dangerous.

People do trust Google’s AI Overviews — because they assume AI can’t lie.

But it can.

It doesn’t intend to lie — it just doesn’t care enough to know the difference.

It’s not malicious; it’s careless.

And that’s somehow worse.

Because carelessness at this scale isn’t neutral — it’s harmful.

When AI mislabels your company, it plants doubt.

When it spreads half-truths, it corrodes trust.

When it confuses two businesses, it damages real people — the ones who built something with time, sweat, and integrity.

6. Napblog’s Reality: Not Perfect, But Always Human

We’re not saying Napblog is perfect.

We’ve made mistakes. We’ve grown, restructured, learned, and improved.

But everything we’ve built — from our Coworking program to our client partnerships — has been based on fairness, communication, and human accountability.

If someone has a concern, we respond.

If something goes wrong, we fix it.

If feedback is harsh, we face it — not hide from it.

That’s what our original LinkedIn post “Napblog’s Approach to Negative Reviews” (from 2024) was all about — showing that transparency matters more than image.

Ironically, that very article — written to teach honesty — is now being misused by AI as proof of dishonesty.

You can’t make this stuff up.

7. This Is Not Innovation. This Is Automation Without Responsibility.

AI isn’t evil. We actually use it — responsibly — in our automation and marketing work.

But there’s a massive difference between using AI to assist humans and letting it replace accountability.

When Google’s AI Overview publishes something false about a company, who’s responsible?

Not the AI.

Not the algorithm.

Not the data aggregator.

No one.

It’s a convenient circle of denial, where “the machine did it” becomes an excuse for misinformation that hurts real people and businesses.

Innovation without accountability isn’t progress.

It’s chaos with a nice interface.

8. The Emotional Side No One Talks About

There’s also the emotional side — the sheer helplessness of seeing something wrong about your work and having no way to fix it.

We reached out, flagged it, reported it — and got silence.

Because you can’t email “AI.”

You can’t call Google Search and say, “Hey, your machine slandered my company.”

You just sit there watching strangers read something false about you, and hope that maybe, someday, someone will scroll far enough to see the truth.

That’s not innovation. That’s indifference.

9. What We Want to Say to Google (and Every AI Team Out There)

Please. Slow down.

We understand the race to dominate AI search. We understand the pressure to deploy fast.

But when you’re dealing with public information — people’s names, companies, livelihoods — accuracy must come before automation.

This isn’t about “AI ethics” as a buzzword.

This is about human consequences.

Because while Google’s AI Overview can move on to the next query, small businesses can’t just “move on” from reputation damage.

We have to explain, clarify, and rebuild the trust that AI carelessly shredded.

10. How to Fix It: Our Suggestions

We don’t want to just complain. We want solutions.

Here's what companies like Google, and every team building AI systems that generate public summaries, should implement right now:

- Real-Time Verification — Cross-check entities before publishing summaries.

- Direct Correction Channels — Allow businesses to report factual errors easily and get timely responses.

- Accountability Logs: Display when and how a summary was generated, and which data it was drawn from.

- Transparency Tags — Show users: “This information may not be verified.”

- Human Review Triggers — When a summary involves reputational claims, have a human review before it’s displayed.

If AI wants our trust, it needs to earn it — just like any other business.

11. What Napblog Learns From This

We learned something too.

We learned that even in an AI-first world, human truth still matters more than algorithmic authority.

We learned that branding isn’t just about design or keywords — it’s about being visible and verifiable.

We learned that we must educate our clients, coworkers, and readers to always question AI outputs — because blind trust is the new digital risk.

12. Our Message to the World

To every business reading this:

Don’t assume what AI says about you is true.

To every freelancer, client, and coworker we’ve worked with:

You know who we are. You’ve seen how we operate. You’ve experienced our integrity first-hand.

To our followers and supporters:

Thank you for not believing everything an algorithm says.

Thank you for trusting people over programs.

And to Google — we’ll say this with both respect and frustration:

“You’ve built the world’s smartest search engine. Please make it smart enough to know the difference between truth and copy-paste confusion.”

13. Closing Thoughts: The Human Still Matters

In the rush to automate everything, we seem to have forgotten one thing:

AI doesn’t understand consequences. Humans do.

Napblog will continue doing what we do best — marketing with integrity, creating real connections, and helping brands communicate clearly.

But from now on, we’ll also add one more thing to our mission:

To remind the world that truth still requires humans.

Because the next time an AI gets it wrong, it might not just hurt a brand — it might erase someone’s reputation, someone’s work, someone’s story.

Final Line:

“Don’t trust AI blindly. Verify before it vilifies.”

— The Napblog Team

(Screenshots attached: Google AI Overview displaying false Napblog reviews; Trustpilot source misattributed to Blog Management)